How to lower the cost of Kubernetes on GKE by shutting down nodes in specific time windows, without any external tool or API.

The problem: we have a test (QA) environment that is only used during business hours, yet keeping it running 24x7 generates unnecessary cost.

The solution is to shut down the test environment outside office hours by removing its nodes from the cluster. Shortly before business hours start, the nodes are recreated and the test environment returns to operation.

For this to work, we need three configuration points:

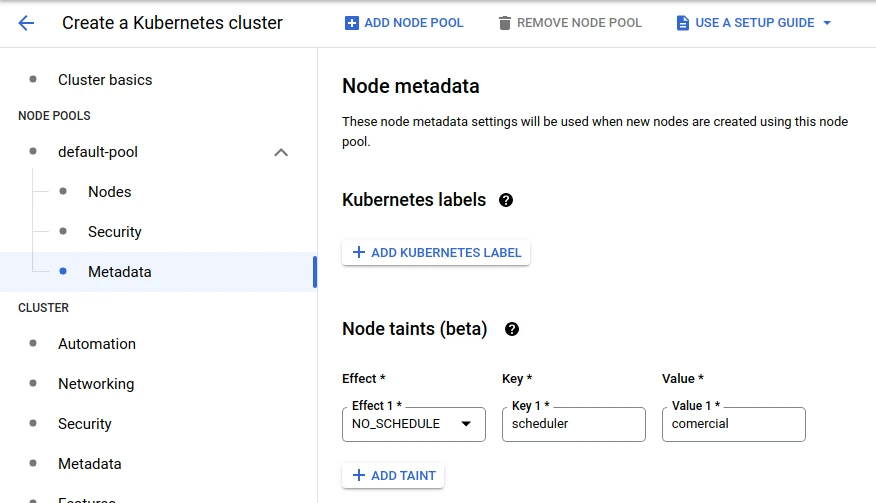

1- A separate node pool that can be removed without affecting production. To make this configuration more effective, you can add a taint to the pool and matching tolerations to the workloads.

2- A common label on all Deployments/StatefulSets that may be shut down during this window. Example: “scheduler=comercial”

3- The list of namespaces that contain the Deployments/StatefulSets to be deactivated.
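For point 3, one way to build the namespace list is to ask the cluster which namespaces contain labeled workloads. This is a minimal sketch that assumes kubectl already points at the cluster. The function name is hypothetical, and so is the `KUBECTL` override, which exists only so the command can be dry-run:

```shell
# Hypothetical helper: print each namespace that contains at least one
# Deployment or StatefulSet carrying the given label, once, sorted.
# Set KUBECTL to another command for a dry run; defaults to kubectl.
list_labeled_namespaces() {
  local label="${1:-scheduler=comercial}"
  "${KUBECTL:-kubectl}" get deployments,statefulsets --all-namespaces \
    -l "$label" \
    -o jsonpath='{range .items[*]}{.metadata.namespace}{"\n"}{end}' \
    | sort -u
}
```

With the workloads shown below, `list_labeled_namespaces scheduler=comercial` would be expected to print namespace-a and namespace-b.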

We need to make sure the nodes and deploys are configured correctly.

Let’s first list our node pools and validate that our environment is prepared:

> gcloud container node-pools list --cluster us-gole-01

```
NAME       MACHINE_TYPE   DISK_SIZE_GB  NODE_VERSION
pool-main  c2-standard-8  100           1.20.10-gke.1600
pool-qa    e2-standard-8  80            1.20.10-gke.1600
```

If the node pool does not exist, create it with the required number of nodes (a single node may be enough) and add the taint.
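The creation step can look like the sketch below. The machine type and disk size mirror the `pool-qa` row listed above. The following are assumptions:

- the helper name;
- the `GCLOUD` override, which you can point at another command for a dry run;
- a default zone or region already configured in gcloud.

```shell
# Hypothetical helper: create the QA node pool with a single node and the
# scheduler=comercial:NoSchedule taint, so only pods that tolerate it land there.
# Assumes a default compute zone/region is configured in gcloud.
create_qa_pool() {
  "${GCLOUD:-gcloud}" container node-pools create "${SCHEDULER_POOL:-pool-qa}" \
    --cluster "${CLUSTER_NAME:-us-gole-01}" \
    --machine-type e2-standard-8 \
    --disk-size 80 \
    --num-nodes 1 \
    --node-taints scheduler=comercial:NoSchedule
}
```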

If the node pool already exists but does not have the taint, add it with the gcloud command that was still in beta at the time of writing:

> gcloud beta container node-pools update pool-qa --cluster us-gole-01 --node-taints='scheduler=comercial:NoSchedule'
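To confirm that the taint is in place, you can read the pool's configuration back with `gcloud container node-pools describe`. This is a minimal sketch. The helper name, the `GCLOUD` override and the defaults (taken from this article's examples) are assumptions. The API reports the effect in enum form, as NO_SCHEDULE.

```shell
# Hypothetical check: print the taints currently configured on the QA pool.
# The output should include the scheduler key with the NO_SCHEDULE effect.
show_pool_taints() {
  "${GCLOUD:-gcloud}" container node-pools describe "${SCHEDULER_POOL:-pool-qa}" \
    --cluster "${CLUSTER_NAME:-us-gole-01}" \
    --format 'value(config.taints)'
}
```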

Now let’s validate that all the deploys we want contain the necessary labels.

This example has no StatefulSets, but the same check applies to them.

> kubectl -n namespace-a get deploy,sts -l scheduler=comercial

```
NAMESPACE    NAME                             READY  UP-TO-DATE  AVAILABLE  AGE
namespace-a  deployment.apps/gole-sonarcube   1/1    1           1          34d
namespace-a  deployment.apps/gole-redis       1/1    1           1          34d
namespace-a  deployment.apps/gole-nodejs-app  1/1    1           1          34d
```

> kubectl -n namespace-b get deploy,sts -l scheduler=comercial

```
NAMESPACE    NAME                                READY  UP-TO-DATE  AVAILABLE  AGE
namespace-b  deployment.apps/gole-netbox-worker  1/1    1           1          34d
namespace-b  deployment.apps/gole-netbox         1/1    1           1          7d18h
```

Next, check that the Deployments have the required tolerations:

> kubectl -n namespace-a get deploy -l scheduler=comercial -o=jsonpath='{.items[*].spec.template.spec.tolerations}'

The output should contain one object like the one below per Deployment. They are all printed in a single list, but it is still easy to check them by eye. The effect must match the taint on the node pool (NoSchedule). A toleration with effect PreferNoSchedule does not tolerate a NoSchedule taint.

```
{"effect":"NoSchedule","key":"scheduler","operator":"Equal","value":"comercial"}
```
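Because the command above prints every toleration in one combined list, a per-Deployment view can be easier to read. This is a sketch using kubectl's jsonpath `range` syntax. The helper name and the `KUBECTL` override (for dry runs) are hypothetical.

```shell
# Hypothetical helper: print "<deployment><TAB><tolerations>" for each
# labeled Deployment in one namespace. KUBECTL can be overridden for dry runs.
show_tolerations() {
  local ns="${1:?usage: show_tolerations <namespace> [label]}"
  local label="${2:-scheduler=comercial}"
  "${KUBECTL:-kubectl}" -n "$ns" get deployments -l "$label" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.template.spec.tolerations}{"\n"}{end}'
}
```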

Here is an example of where tolerations should be configured:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: gole-app
  labels:
    scheduler: comercial
    app: gole-app
    tier: api-extended
spec:
  progressDeadlineSeconds: 600
  replicas: 3
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      scheduler: comercial
      app: gole-app
      tier: api-extended
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        scheduler: comercial
        app: gole-app
        tier: api-extended
    spec:
      # Must match the pool-qa taint scheduler=comercial:NoSchedule
      tolerations:
        - key: "scheduler"
          operator: "Equal"
          value: "comercial"
          effect: "NoSchedule"
      containers:
        # ... container definitions omitted
```
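Tolerations only allow these pods onto the tainted pool; they do not keep them off `pool-main`. If the QA workloads must run only on `pool-qa`, you can add a nodeSelector on the `cloud.google.com/gke-nodepool` label that GKE sets on every node. This step is not covered in the original setup. The sketch below applies it with `kubectl patch`; the helper name is hypothetical.

```shell
# Hypothetical helper: pin a Deployment's pods to one GKE node pool by adding
# a nodeSelector on the cloud.google.com/gke-nodepool node label.
pin_to_pool() {
  local ns="$1" deploy="$2" pool="${3:-pool-qa}"
  local patch="{\"spec\":{\"template\":{\"spec\":{\"nodeSelector\":{\"cloud.google.com/gke-nodepool\":\"${pool}\"}}}}}"
  "${KUBECTL:-kubectl}" -n "$ns" patch deployment "$deploy" --type merge -p "$patch"
}
```

For example, `pin_to_pool namespace-a gole-app` triggers a rollout of gole-app onto pool-qa. Adding the same nodeSelector to the manifest keeps it in version control.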

So now we can get started.

For the implementation of the solution we will need to create some resources:

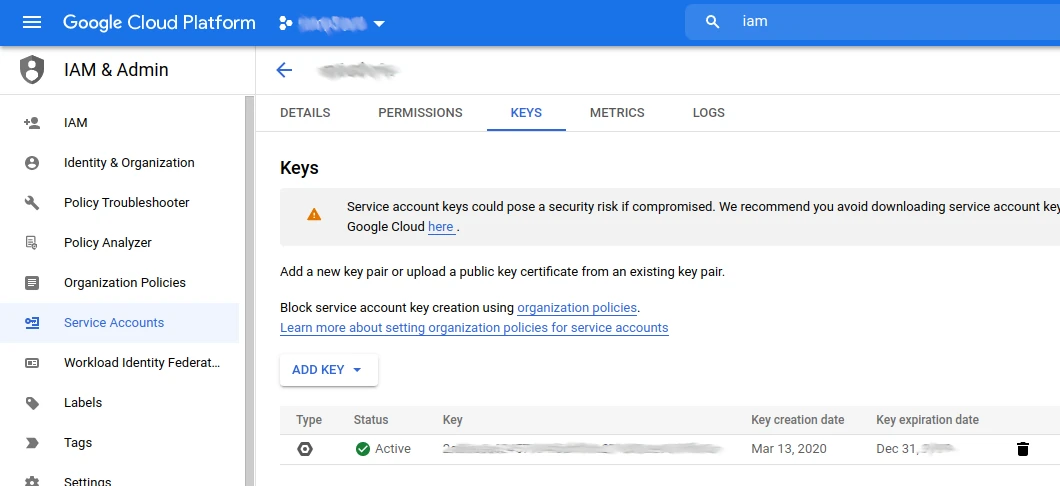

Our secret will be the service account key file generated in IAM (Service accounts > Keys), renamed to key.json.

This service account must have permission to administer the Kubernetes cluster through the gcloud SDK.
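If the service account does not exist yet, you can also create it from the command line. This is a sketch under stated assumptions:

- The account name `gke-scheduler` is hypothetical.
- `roles/container.admin` covers both resizing node pools and scaling workloads through the Kubernetes API. It is a broad role, so a narrower one may be enough for your script.

```shell
# Hypothetical setup: create a service account, grant it a GKE role and
# download a JSON key as key.json. SA_NAME and the role are assumptions.
setup_scheduler_sa() {
  local project="${PROJECT_ID:-gole}"
  local name="${SA_NAME:-gke-scheduler}"
  local email="${name}@${project}.iam.gserviceaccount.com"
  "${GCLOUD:-gcloud}" iam service-accounts create "$name" --project "$project"
  "${GCLOUD:-gcloud}" projects add-iam-policy-binding "$project" \
    --member "serviceAccount:${email}" --role roles/container.admin
  "${GCLOUD:-gcloud}" iam service-accounts keys create key.json \
    --iam-account "$email"
}
```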

> cat key.json

```json
{
  "type": "service_account",
  "project_id": "gole-autoscaling-sample",
  "private_key_id": "asdfasdfasdfasdasdfasdasdf....",
  "private_key": "-----BEGIN PRIVATE KEY-----\ndasdfasdf393993..asdfsf.aksdflksjdflksjfkjafljasdjkf....Ijr7ZCBgpbQrDH\nvNUw/JxaVbLtpvy3KSmYpjGfKnHFs+wPQi+NFmwrdOZHvKjdtRNxHvPqgWNxCSAh\nMwEB8cKs0dzif1Hbg7EtYrZOR8g7LZrTD3c4lTsahMyI9x3kN0aCe5QXDXvtPEJ1\n3s5XFBriQc1tmHwMEV4VW8s=CONTINUA....\n-----END PRIVATE KEY-----\n",
  "client_email": "infra@golesuite.com",
  "client_id": "11223344556677889900",
  "auth_uri": "https://accounts.google.com/o/oauth2/auth",
  "token_uri": "https://oauth2.googleapis.com/token",
  "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",
  "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/blablabla"
}
```

Let’s then add this file as a secret in a dedicated namespace for our automation. If the namespace does not exist yet, create it first with `kubectl create namespace devops`.

> kubectl -n devops create secret generic gcloud-key --from-file=./key.json

Checking the created key:

> kubectl -n devops get secret gcloud-key -o=jsonpath='{.data.key\.json}' | base64 -d

Note: make sure that only administrators can read the contents of this namespace. In this case, we use the devops namespace.
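One way to check that restriction is to ask the API server with `kubectl auth can-i`, impersonating another user. This is a sketch; the helper name is hypothetical. Impersonation (`--as`) only works if your own account is allowed to impersonate.

```shell
# Hypothetical check: ask the API server whether a given user may read the
# gcloud-key secret in the devops namespace. kubectl prints "yes" or "no".
can_read_gcloud_key() {
  local subject="${1:?usage: can_read_gcloud_key <user>}"
  "${KUBECTL:-kubectl}" auth can-i get secret/gcloud-key -n devops --as "$subject"
}
```

For a non-administrator account, `can_read_gcloud_key developer@example.com` should print `no`.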

Now let’s create a YAML file, cronjob.yaml, with two CronJobs: one that shuts the environment down and one that starts it up.

Our CronJobs are defined as follows:

```yaml
# batch/v1beta1 matches the 1.20 cluster used in this article. On Kubernetes
# 1.21+ use batch/v1; the batch/v1beta1 CronJob API was removed in 1.25.
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  labels:
    role: devops
    owner: gole
  name: qa-env-shutdown
  namespace: devops
spec:
  # 22:00 UTC = 19:00 in GMT-3, Monday to Friday
  schedule: "00 22 * * 1,2,3,4,5"
  concurrencyPolicy: Forbid
  jobTemplate:
    metadata:
      labels:
        role: devops
        owner: gole
    spec:
      template:
        spec:
          restartPolicy: Never
          containers:
            - name: gke-operator
              image: alpine
              command:
                - "/bin/sh"
              args:
                - -c
                - "apk add --no-cache curl bash ; \
                  curl -O https://raw.githubusercontent.com/golesuite/gcloud-gke-scheduling/main/alpine-gcloud.sh ; \
                  chmod +x ./alpine-gcloud.sh ; \
                  ./alpine-gcloud.sh"
              imagePullPolicy: IfNotPresent
              volumeMounts:
                - mountPath: /etc/localtime
                  name: tz-config
                - name: gcloud-key
                  mountPath: /etc/gcloud/
              terminationMessagePath: /dev/termination-log
              terminationMessagePolicy: File
              env:
                - name: SCALE_DEPLOY_NUMBER
                  value: "0"
                - name: SCALE_STS_NUMBER
                  value: "0"
                - name: SCALE_NODES_NUMBER
                  value: "0"
                - name: GCLOUD_ZONE
                  value: "southamerica-east1-a"
                - name: CLUSTER_NAME
                  value: "br-gole-01"
                - name: SCHEDULER_LABEL
                  value: "scheduler=comercial"
                - name: SCHEDULER_POOL
                  value: "pool-qa"
                - name: PROJECT_ID
                  value: "gole"
          volumes:
            - name: tz-config
              hostPath:
                path: /usr/share/zoneinfo/America/Sao_Paulo
            - name: gcloud-key
              secret:
                secretName: gcloud-key
---
apiVersion: batch/v1beta1
kind: CronJob
metadata:
  labels:
    role: devops
    owner: gole
  name: qa-env-startup
  namespace: devops
spec:
  # 10:30 UTC = 07:30 in GMT-3, Monday to Friday
  schedule: "30 10 * * 1,2,3,4,5"
  concurrencyPolicy: Forbid
  jobTemplate:
    metadata:
      labels:
        role: devops
        owner: gole
    spec:
      template:
        spec:
          restartPolicy: Never
          containers:
            - name: gke-operator
              image: alpine
              command:
                - "/bin/sh"
              args:
                - -c
                - "apk add --no-cache curl bash ; \
                  curl -O https://raw.githubusercontent.com/golesuite/gcloud-gke-scheduling/main/alpine-gcloud.sh ; \
                  chmod +x ./alpine-gcloud.sh ; \
                  ./alpine-gcloud.sh"
              imagePullPolicy: IfNotPresent
              volumeMounts:
                - mountPath: /etc/localtime
                  name: tz-config
                - name: gcloud-key
                  mountPath: /etc/gcloud/
              terminationMessagePath: /dev/termination-log
              terminationMessagePolicy: File
              env:
                - name: SCALE_DEPLOY_NUMBER
                  value: "1"
                - name: SCALE_STS_NUMBER
                  value: "1"
                - name: SCALE_NODES_NUMBER
                  value: "1"
                - name: GCLOUD_ZONE
                  value: "southamerica-east1-a"
                - name: CLUSTER_NAME
                  value: "br-gole-01"
                - name: SCHEDULER_LABEL
                  value: "scheduler=comercial"
                - name: SCHEDULER_POOL
                  value: "pool-qa"
                - name: PROJECT_ID
                  value: "gole"
          volumes:
            - name: tz-config
              hostPath:
                path: /usr/share/zoneinfo/America/Sao_Paulo
            - name: gcloud-key
              secret:
                secretName: gcloud-key
```

Our CronJobs use two volumes:

tz-config is a hostPath volume pointing to the zoneinfo file found on any Linux system. Mounted over /etc/localtime, it tells the container that its time zone is America/Sao_Paulo. It only affects the clock inside the container, not the moment the CronJob is triggered.

gcloud-key is a secret volume that links to the gcloud-key secret created in the previous step, so the key is available inside the container at /etc/gcloud/key.json.

| Environment variable | Required | Example value |
|---|---|---|
| SCALE_DEPLOY_NUMBER | X | 0 |
| SCALE_STS_NUMBER | X | 0 |
| SCALE_NODES_NUMBER | X | 0 |
| PROJECT_ID | X | gole |
| CLUSTER_NAME | X | br-gole-01 |
| GCLOUD_ZONE | X | southamerica-east1-a |
| SCHEDULER_LABEL | X | scheduler=comercial |
| SCHEDULER_POOL | X | pool-qa |

Before creating and applying the CronJob file, change these values to match your environment.

They are located in the env section of each CronJob (the startup values are shown here):

```yaml
env:
  - name: SCALE_DEPLOY_NUMBER
    value: "1"
  - name: SCALE_STS_NUMBER
    value: "1"
  - name: SCALE_NODES_NUMBER
    value: "1"
  - name: GCLOUD_ZONE
    value: "southamerica-east1-a"
  - name: CLUSTER_NAME
    value: "br-gole-01"
  - name: SCHEDULER_LABEL
    value: "scheduler=comercial"
  - name: SCHEDULER_POOL
    value: "pool-qa"
  - name: PROJECT_ID
    value: "gole"
```

Once all the steps have been followed, we are ready to apply our cronjob.

First, a word on where the commands that actually take the environment down and bring it back up live, since everything so far has only prepared the infrastructure.

The commands that perform the scaling are in the golesuite/gcloud-gke-scheduling repository on GitHub, the same project the CronJob downloads its script from.

The CronJob downloads alpine-gcloud.sh and runs it in the args section of its container.

That file contains the sequence of commands that does the actual work.

There are two main steps:

1- Shutdown

2- Startup

Everything else we created is the structure that allows these commands to run from inside the cluster.
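The actual commands live in alpine-gcloud.sh, which this article does not reproduce. The sketch below only illustrates what the two steps involve, using the CronJob's environment variables; it is not the contents of that script. Several pieces are assumptions:

- `NAMESPACES` (a space-separated list), since the CronJob above defines no such variable;
- the function names;
- the `GCLOUD`/`KUBECTL` dry-run overrides.

```shell
# Hypothetical sketch of the shutdown/startup steps. NOT the contents of
# alpine-gcloud.sh; it only illustrates the idea with the CronJob's env vars.
# NAMESPACES (space-separated) is an assumption; the CronJob does not define it.
run_gcloud()  { "${GCLOUD:-gcloud}" "$@"; }
run_kubectl() { "${KUBECTL:-kubectl}" "$@"; }

# Log in with the mounted key and fetch kubectl credentials for the cluster.
authenticate() {
  run_gcloud auth activate-service-account --key-file /etc/gcloud/key.json
  run_gcloud container clusters get-credentials "$CLUSTER_NAME" \
    --zone "$GCLOUD_ZONE" --project "$PROJECT_ID"
}

# Scale every labeled Deployment/StatefulSet in each listed namespace.
scale_workloads() {
  local ns
  for ns in $NAMESPACES; do
    run_kubectl -n "$ns" scale deployment -l "$SCHEDULER_LABEL" \
      --replicas "$SCALE_DEPLOY_NUMBER"
    run_kubectl -n "$ns" scale statefulset -l "$SCHEDULER_LABEL" \
      --replicas "$SCALE_STS_NUMBER"
  done
}

# Resize the QA node pool to SCALE_NODES_NUMBER nodes without prompting.
resize_pool() {
  run_gcloud container clusters resize "$CLUSTER_NAME" \
    --node-pool "$SCHEDULER_POOL" --num-nodes "$SCALE_NODES_NUMBER" \
    --zone "$GCLOUD_ZONE" --project "$PROJECT_ID" --quiet
}

# Shutdown (0 nodes): workloads first, then nodes.
# Startup (more than 0 nodes): nodes first, then workloads.
gke_schedule() {
  authenticate
  if [ "$SCALE_NODES_NUMBER" -eq 0 ]; then
    scale_workloads
    resize_pool
  else
    resize_pool
    scale_workloads
  fi
}
```

The ordering means the pool is emptied of QA pods before it shrinks, and has nodes again before pods are recreated. In this sketch every labeled Deployment is scaled to the same SCALE_DEPLOY_NUMBER. As a result, a Deployment that normally runs 3 replicas, like gole-app above, would come back with 1 after the startup job.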

With the CronJob file saved locally and the variables, schedules and namespaces adjusted, let’s apply it.

Note that the CronJob schedules are 3 hours ahead of local time. CronJob schedules are evaluated by the Kubernetes control plane, which on GKE uses UTC; the /etc/localtime mount only changes the clock inside the container. Time zone support for CronJobs (the spec.timeZone field) was not stable when this was written; it only became GA in Kubernetes 1.27. The safest approach is therefore to add 3 hours to the desired local time.

For example, to run at 08:00 in a GMT-3 city, schedule the job for 8 + 3 = 11, that is, 11:00 UTC.
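The same arithmetic can be scripted, so that the schedules are derived instead of computed by hand. This is a sketch with hypothetical helper names. The UTC offset is passed in hours (-3 for GMT-3).

```shell
# Hypothetical helper: convert a local hour to the matching UTC hour,
# wrapping around midnight. utc_offset is in hours, e.g. -3 for GMT-3.
to_utc_hour() {
  local local_hour="$1" utc_offset="$2"
  echo $(( ( (local_hour - utc_offset) % 24 + 24 ) % 24 ))
}

# Hypothetical helper: build a Monday-to-Friday CronJob schedule string
# from a minute, a local hour and the local UTC offset.
weekday_schedule() {
  printf '%s %s * * 1,2,3,4,5\n' "$1" "$(to_utc_hour "$2" "$3")"
}
```

`weekday_schedule 30 7 -3` produces `30 10 * * 1,2,3,4,5` and `weekday_schedule 00 19 -3` produces `00 22 * * 1,2,3,4,5`. The helper leaves the day-of-week field unchanged. If a conversion crosses midnight, you must shift that field by hand.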

To start the environment at 07:30 local time:

`schedule: "30 10 * * 1,2,3,4,5"`

To shut it down at 19:00 local time:

`schedule: "00 22 * * 1,2,3,4,5"`

> kubectl apply -f cronjob.yaml

> kubectl -n devops get cronjob

When a schedule fires, a Job is created:

> kubectl -n devops get job

Each Job starts a pod, whose logs we can follow.

To validate that the processes run correctly, temporarily change the schedules to times when you are working.
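Instead of editing the schedules, you can run a CronJob once on demand with `kubectl create job --from=cronjob/...`. This is a sketch. The helper name, the `-manual-<timestamp>` job name and the 10-minute timeout are assumptions.

```shell
# Hypothetical helper: run a CronJob once, right now, by creating a Job from
# its template, wait up to 10 minutes for it to complete, then print its logs.
trigger_cronjob() {
  local cronjob="${1:?usage: trigger_cronjob <cronjob-name>}"
  local job
  job="${cronjob}-manual-$(date +%s)"
  "${KUBECTL:-kubectl}" -n devops create job "$job" --from "cronjob/${cronjob}"
  "${KUBECTL:-kubectl}" -n devops wait --for condition=complete "job/${job}" --timeout 600s
  "${KUBECTL:-kubectl}" -n devops logs "job/${job}"
}
```

For example, `trigger_cronjob qa-env-startup` runs the startup job immediately. Triggering `qa-env-shutdown` this way really does shut the QA environment down. If the job fails, `kubectl wait` times out, and the logs printed afterwards should show why.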


There are many ways to create and manage a Kubernetes cluster, so don’t limit yourself: create different solutions and different ways of applying them.

Use and abuse the resources of the APIs.

Visit our Contact page and chat with us. We will be happy to help.

Success!